Plus ça change, plus c'est la même chose... (the more things change, the more they stay the same)

There have been several significant enhancements to "queries" (the FOR EACH NO-LOCK statement and its dynamic cousins):

- Since 10.2B06, the `-prefetch*` startup parameters allow network messages to contain *much* more data: rather than a maximum of 16 records per message you can send thousands, and the first message can be full instead of containing only one record

- Since 11.6, `-Mm` only needs to be set on the server; there is no longer any need to track down *every* client startup to increase the message size

- TABLE-SCAN was added to FOR EACH (in 11.?) for cases where you are reading all (or most) of a table and don't need the records in index order

- Server-side joins rolled out in OE12, with incremental improvements from release to release

- Multi-threaded servers in OE12 mean that you no longer need lots and lots of "server" processes
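To see why the prefetch change matters, here is a back-of-the-envelope sketch that counts server-to-client messages for a NO-LOCK result set. It is a simplified model, not OpenEdge internals: it assumes every message holds a fixed number of records (in practice that number also depends on record size and on `-Mm`), and the function name and the 5,000 records-per-message figure are hypothetical.

```python
import math


def messages_needed(num_records: int, records_per_message: int,
                    first_message_single_record: bool = False) -> int:
    """Estimate how many server-to-client messages a NO-LOCK result set needs.

    Hypothetical model: every message carries `records_per_message` records,
    except that the first message may optionally carry only one record
    (the pre-prefetch behaviour described above).
    """
    if num_records <= 0:
        return 0
    if first_message_single_record:
        remaining = num_records - 1
        return 1 + math.ceil(remaining / records_per_message)
    return math.ceil(num_records / records_per_message)


if __name__ == "__main__":
    n = 100_000
    print("old style:", messages_needed(n, 16, first_message_single_record=True))
    print("prefetch: ", messages_needed(n, 5_000))
```

For a hypothetical 100,000-record result set, the old behaviour (one record in the first message, then 16 per message) needs 6,251 messages, while 5,000 records per message needs only 20. Far fewer messages means far less per-message overhead and latency.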

So, to the degree that your application's performance is sensitive to large NO-LOCK result sets being returned from the server to the client, these changes can be hugely beneficial.

In fact, as Cringer alludes, queries that take advantage of these features can actually be faster over TCP/IP than in shared memory. That is because you are streaming a big hunk of data and two CPUs are working on the job simultaneously, one at each end of the stream. In a case like that the startup latency is negligible.
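A toy model of the "two CPUs" argument: in shared memory one process both fetches and processes each record, so the per-record costs add up; when streaming over TCP/IP the server and the client work in parallel, so the slower side sets the pace and the startup latency is paid only once. The function names, per-record costs, and startup latency below are made-up illustrative values, and the model ignores bandwidth and message-packing overhead.

```python
def serial_seconds(num_records: int, fetch_us: float, process_us: float) -> float:
    """Shared memory: one process fetches and then processes each record, in turn."""
    return num_records * (fetch_us + process_us) / 1e6


def pipelined_seconds(num_records: int, fetch_us: float, process_us: float,
                      startup_ms: float) -> float:
    """Streaming over TCP/IP: server fetches while the client processes.

    The slower of the two stages sets the pace; the startup latency
    (waiting for the first message) is paid once for the whole result set.
    """
    return startup_ms / 1e3 + num_records * max(fetch_us, process_us) / 1e6


if __name__ == "__main__":
    n = 1_000_000
    print(f"serial:    {serial_seconds(n, 2.0, 2.0):.3f} s")
    print(f"pipelined: {pipelined_seconds(n, 2.0, 2.0, 1.0):.3f} s")
```

With a hypothetical 2 µs of fetch work and 2 µs of client work per record, a million records take 4.0 s serially versus about 2.0 s pipelined; the 1 ms startup latency barely registers.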

However...

The mechanics of a FIND statement have not changed, and 4GL applications use a LOT of FIND statements. At best, a FIND costs three network messages: the request, the response, and releasing the cursor. At worst it can be a LOT worse: five, six, or seven messages going back and forth for one record.
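Because a FIND pays for every message individually, its network cost scales directly with latency. This rough sketch assumes each message costs one one-way trip of about half the ping time and ignores server and client CPU time entirely; the function name and the one-million-FIND workload are hypothetical.

```python
def find_network_seconds(num_finds: int, messages_per_find: int, ping_ms: float) -> float:
    """Rough estimate of pure network wait time for a batch of FIND statements.

    Assumption: each message costs one one-way trip, taken as half the
    round-trip (ping) time. CPU time on either end is ignored.
    """
    one_way_ms = ping_ms / 2.0
    return num_finds * messages_per_find * one_way_ms / 1000.0


if __name__ == "__main__":
    for label, ping in [("LAN default", 0.058), ("LAN alternate", 0.069), ("WAN", 35.0)]:
        secs = find_network_seconds(1_000_000, 3, ping)
        print(f"{label:14s} ping {ping:7.3f} ms -> {secs:10.1f} s for 1,000,000 FINDs")
```

With three messages per FIND, a million FINDs cost about 87 seconds of pure network wait at a 0.058 ms ping, but roughly 52,500 seconds (over 14 hours) at 35 ms. In the seven-message worst case, multiply those figures by 7/3.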

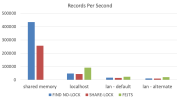

Some benchmarking:

Shared memory is obviously a lot faster for FIND statements.

The FOR EACH JOIN TABLE-SCAN (FEJTS) test is an example of streaming a large result set. In that particular case the TCP/IP connection handily beat shared memory. (In real life you probably don't do that very often.)

"LAN default" and "LAN alternate" were two different ping times (0.058ms and 0.069ms). I tried to benchmark a third option with a WAN whose ping times were on the order to 35ms. I killed the benchmark after 18 hours,

In my benchmarking, ping time is the most critical factor in FIND performance, so you want to do whatever you can to minimize it. A "good" ping time is less than 0.1 ms. If your pings are greater than 1 ms, that is not good. Tens of milliseconds is horrific.

Remember: the speed of light is roughly one foot per nanosecond, and signals travel through fiber at only about two-thirds of that. The more times a signal gets handed off through routers, switches, NICs, firewalls, and such, the more latency you are going to endure.

That means that you really want your clients and your servers to be physically close. If you are running in the cloud, try to use Azure "proximity placement groups" or AWS "local zones".
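As a rough illustration of why distance matters, this sketch turns the speed-of-light rule of thumb into a best-case round-trip time over fiber. It assumes a straight fiber run and a velocity factor of exactly two-thirds, and it ignores every router, switch, NIC, and firewall, so real pings will always be slower; the constant and function names are hypothetical.

```python
# Light travels about 0.3 m (roughly one foot) per nanosecond in a vacuum;
# the rule of thumb is that fiber carries signals at about two-thirds of that.
SPEED_OF_LIGHT_M_PER_NS = 0.299792458
FIBER_VELOCITY_FACTOR = 2.0 / 3.0
FIBER_M_PER_NS = SPEED_OF_LIGHT_M_PER_NS * FIBER_VELOCITY_FACTOR  # ~0.2 m/ns


def min_fiber_rtt_ms(distance_km: float) -> float:
    """Best-case round-trip time over a straight fiber run, ignoring all equipment."""
    one_way_ns = distance_km * 1000.0 / FIBER_M_PER_NS
    return 2.0 * one_way_ns / 1e6


def max_fiber_distance_km(rtt_ms: float) -> float:
    """Farthest the server could possibly be for a given ping, ignoring all equipment."""
    one_way_ns = rtt_ms * 1e6 / 2.0
    return one_way_ns * FIBER_M_PER_NS / 1000.0


if __name__ == "__main__":
    for rtt in (0.1, 1.0, 35.0):
        print(f"ping {rtt:5.1f} ms -> server at most {max_fiber_distance_km(rtt):8.1f} km away")
```

Under these assumptions, a 0.1 ms "good" ping leaves room for only about 10 km of fiber before any router or firewall is involved, and 1 ms allows about 100 km.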