parttime Admin

New Member

duh...

That's too bad. Is the vendor at least still providing support and bug fixes and willing to move you to a modern OE release?

no,

no ABL compiler,

no pw for writing, read only PW for ODBC

I would prefer to

My plans regarding step 2 with Progress means have been dismissed, so I guess I have to talk to my manufacturer to have him create some application-based synchronization workflow for maintenance scenarios like these. Think of a denormalized export/import or similar. They have hundreds of sites all in the same field of business (24/7), so this would be of universal use.

- "D&L, rebuild, do whatever" on a recent copy of the db on server B (no downtime concern), while doing uninterrupted business with the original db on server A.

- Then "synchronize" the newly refurbed instance on B "somehow" with all data that has been accumulated in the meantime on A

- Stop both, copy B to A, restart

The PUG method looks pretty cool, but is nowhere near my skills.

Just a thought - you're on Windows and the backup takes 90 minutes. That seems a little long tbh. When you do the backup are you using the same filename each time, and are you backing up over the old backup file? If so, consider moving the old backup to the side, or using a unique file naming convention. There is a Windows "feature" where writing the backup over the top of an existing one is much slower than writing to a new file.

Hello,

- Old Version was 10.2B (sorry)

- Daily full backup takes 90 min (online); WIN19, 130 GB RAM, 12*2 cores at 3 GHz, VMWare7.1 (no system expert though)

- I keep an "archive instance" of db and Application on a dedicated server with all data from day one to end of 2023.

- However data retention could become a potential problem, being in the EU and handling personal information.

That's not my main concern at the moment though, but an argument nevertheless (no clear legal policy right now in my country)

- Concerning my nerves, let me put it this way

"...This should speed up backup, ...TRAINING RUNS and therefore skill-building and thus save my nerves in the long run".

- 12 hrs downtime is not possible. Like really not.

Would deleting and "emptying data blocks" at least free space for future growth? Better than nothing?

The system keeps growing anyhow: because our business is growing, more recent data gets stored than the amount of historic data that could safely be deleted.

Yes I do so and I will consider...

Just a thought - you're on Windows and the backup takes 90 minutes. That seems a little long tbh. When you do the backup are you using the same filename each time, and are you backing up over the old backup file? If so, consider moving the old backup to the side, or using a unique file naming convention. There is a Windows "feature" where writing the backup over the top of an existing one is much slower than writing to a new file.

This won't solve the database size issue, but it may help with the backups.
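One way to get a unique file name per run is to build it from a timestamp. Below is a minimal Python sketch, assuming the backup is launched from a script. `backup_target` and `probkup_command` are made-up helper names, the directory and database names are the placeholders used elsewhere in this thread, and the `probkup` arguments are copied from the command posted further down with nothing added.

```python
from datetime import datetime
from pathlib import PureWindowsPath


def backup_target(backup_dir, db_name, now=None):
    """Return a backup path that is unique per run, e.g. myDb_20240102_030405.bck."""
    now = now or datetime.now()
    stamp = now.strftime("%Y%m%d_%H%M%S")
    return PureWindowsPath(backup_dir) / f"{db_name}_{stamp}.bck"


def probkup_command(dlc_dir, db_name, target):
    """Build the argument list; the flags are the ones quoted in this thread."""
    exe = PureWindowsPath(dlc_dir) / "probkup"
    return [str(exe), "online", db_name, str(target), "-verbose", "-Bp", "64"]


if __name__ == "__main__":
    # Placeholder names (MyDLCDir, myDb, F:\myBackupDir) as used in the thread.
    target = backup_target(r"F:\myBackupDir", "myDb")
    # Only print the command; actually running it is left to the calling script.
    print(" ".join(probkup_command("MyDLCDir", "myDb", target)))
```

With unique names the old files pile up, so the calling script would also need some cleanup or rotation of older backups.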

If you are starting the backup using the Windows Task Scheduler beware that this defaults the task priority to low, killing backup performance even when the system is doing nothing else - see Progress Knowledge Base article P169922.

hmm

the 90 min were wrong (wishful thinking), usual duration is 160 min

so I started a backup after removing the last one, but so far it doesn't seem to make a big difference

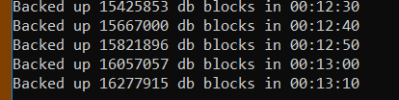

around 20 k Blocks/10sec @ 176 M Blocks total -> 135 min

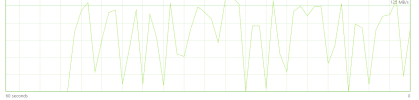

Disk transfer rate is oscillating, but maxes at about 125 MB/s

so I'm wondering whether the backup is so slow that the disk doesn't have much to do, OR the disk sucks and that's why the backup takes so long
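A quick way to sanity-check figures like these is to convert the observed block rate into a duration and into an implied throughput, then compare the latter with what the disk shows. This is a rough Python sketch, assuming a constant rate and that the "Blocks" in the verbose output are database blocks. The block size is not stated in this thread, so 4 KB and 8 KB are tried as assumptions, and the function names are made up.

```python
def eta_minutes(total_blocks, blocks_per_interval, interval_s=10.0):
    """Minutes needed to back up total_blocks at a constant observed rate."""
    blocks_per_s = blocks_per_interval / interval_s
    return total_blocks / blocks_per_s / 60.0


def implied_mb_per_s(blocks_per_interval, block_size_bytes, interval_s=10.0):
    """Throughput in MB/s (10**6 bytes) implied by a block rate and block size."""
    return blocks_per_interval / interval_s * block_size_bytes / 1e6


if __name__ == "__main__":
    rate = 20_000          # blocks per 10 s, as posted
    total = 176_000_000    # total blocks, as posted
    print(f"estimated duration: {eta_minutes(total, rate):.0f} min")
    for size in (4096, 8192):  # assumed db block sizes, not given in the thread
        print(f"{size} B blocks: {implied_mb_per_s(rate, size):.1f} MB/s")
```

With the figures exactly as typed this comes to roughly 1,467 min rather than 135 min, so one of the two numbers is probably off. If the rate is right, 2,000 blocks/s is only about 8 MB/s (4 KB blocks) or 16 MB/s (8 KB blocks), well below the 125 MB/s peak. That would suggest the backup is not the thing saturating the disk, though the disk is shared with other writers.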

the same virtual disk is used by the aiarchivemanager btw (757 ai Files per day at 8-10 GB total)

actual cmd (called via script)

MyDLCDir\probkup online myDb F:\myBackupDir\myDb.bck -verbose -Bp 64