rzr

Member

OE10.1C / Windows 7.

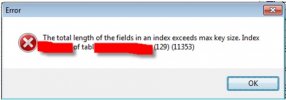

One of my teammates ran into error 11353 while updating a field in one of our maintenance tables:

The total length of the fields in an index exceeds max key size. Index <index-name> of table <table-name> (129) (11353)

Here is the code:

    FOR EACH Table EXCLUSIVE-LOCK
        WHERE Table.Field1 = "xxx"
          AND Table.Field2 = "yyy":
        ASSIGN Table.Field3 = Table.Field3 + ",value1,value2".
    END.

Additional Information:

1. There are only two records in Table that match the WHERE clause.

2. The total length of the fields in the index is less than 1970 characters (including value1 and value2).

3. Index is on Field1 + Field2 + Field3.

I find this strange because I would expect the FOR EACH to execute only twice, since only two records match the WHERE clause. Instead, the FOR EACH kept iterating on the "first" record, appending to Field3 each time until the index key grew too long and error 11353 was raised.

I cannot figure out why the FOR EACH would hold on to the first record and keep iterating on it.
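
A possible explanation, offered as an assumption rather than something confirmed here: Field3 is part of the index the FOR EACH is walking, so appending to it changes the record's key and moves the record further along that index, where the same loop finds it again. If that is the cause, building the result list before any update should avoid the repeat visits. The sketch below uses REPEAT PRESELECT with the same placeholder table and field names as the code above. It has not been run against the real table.

```abl
/* Sketch only, under the assumption described above.
   PRESELECT builds the list of matching records before the
   block body runs, so changing Field3 (an index component)
   should not cause the same record to be visited again. */
REPEAT PRESELECT EACH Table EXCLUSIVE-LOCK
    WHERE Table.Field1 = "xxx"
      AND Table.Field2 = "yyy":

    /* Step through the preselected list; when the list is
       exhausted, FIND NEXT raises ENDKEY and the REPEAT ends. */
    FIND NEXT Table.

    ASSIGN Table.Field3 = Table.Field3 + ",value1,value2".
END.
```

With two matching records, this block would be expected to update each record once and then leave the loop.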