pankajpatel23

Hello all,

System Configuration:

OS: IBM AIX 5.3.0.0, 64-bit, 8 CPUs

Memory: 58 GB

Progress: 10.1C

Database size: 47.9 GB (per dbanalysis report); 152 GB (per promon -> 5. Activity)

Challenge: A binary dump of one table takes more than 7 hours, so I need your input on how to reduce this time.

Table name: spt_det

Number of records in the table: 62,026,509

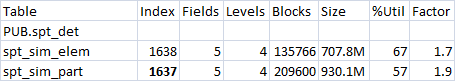

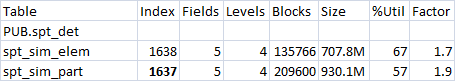

Index detail on this table:

Scenario 1:

Index Used: 1638

-thread: 1

Command: proutil $DBNAME -C dump spt_det $DMPDIR -index 1638 -thread 1 -threadnum 4 -dumplist $DMPDIR/spt_det.lst

Time taken to dump the data: ~9 hrs

Scenario 2:

Index Used: 1637

-thread: 0

Command: proutil $DBNAME -C dump spt_det $DMPDIR -index 1637 -thread 0 -threadnum 4 -dumplist $DMPDIR/spt_det.lst

Time taken to dump the data: ~11 hrs

Scenario 3:

Index Used: 1638

-thread: 0

Command: proutil $DBNAME -C dump spt_det $DMPDIR -index 1638 -thread 0 -threadnum 4 -dumplist $DMPDIR/spt_det.lst

Time taken to dump the data: ~7 hrs
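To compare the three runs on a common scale, the timings can be converted into records per second. This is a minimal sketch that assumes each "~N hrs" figure is exactly N hours of wall-clock time and uses the record count reported above; the function name `throughput` is hypothetical.

```shell
#!/bin/sh
# Approximate binary-dump throughput for the three scenarios above.
# Assumption: each "~N hrs" timing is treated as exactly N hours of wall-clock time.
RECORDS=62026509   # record count of spt_det, as reported above

# Hypothetical helper. Input columns: scenario number, index number, -thread value, hours taken.
throughput() {
    awk -v recs="$RECORDS" '{
        secs = $4 * 3600
        printf "Scenario %s (index %s, -thread %s): %.0f records/s\n", $1, $2, $3, recs / secs
    }' <<EOF
1 1638 1 9
2 1637 0 11
3 1638 0 7
EOF
}

throughput
```

With these inputs the script prints about 1914, 1566 and 2461 records/s for Scenarios 1, 2 and 3 respectively.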

Is there any way I can bring this dump time down further?

Thanks for your input.

Regards,

Pankaj